AI For Sign Language Circuit Diagram

A computer vision based gesture detection system that automatically detects the number of fingers held up as a hand gesture and lets you control simple button-pressing games using your hand gestures.
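As a rough illustration of finger counting, the sketch below assumes that a hand tracker has already produced 21 (x, y) landmarks per hand in the layout used by MediaPipe Hands. The function names, the thumb rule, and the count-to-key mapping are hypothetical and are not taken from the project described above.

```python
# Landmark indices follow the 21-point hand layout used by MediaPipe Hands.
FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring, pinky fingertips
FINGER_PIPS = (6, 10, 14, 18)  # middle (PIP) joint of each of those fingers
THUMB_TIP, THUMB_IP = 4, 3

# Hypothetical mapping from finger count to a game key (assumption).
KEY_FOR_COUNT = {1: "left", 2: "right", 5: "space"}


def count_fingers(landmarks, right_hand=True):
    """Count extended fingers from 21 (x, y) points in image coordinates.

    The y axis grows downward, so an upright finger counts as extended
    when its tip has a smaller y than its middle joint.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    count = sum(
        1
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
        if landmarks[tip][1] < landmarks[pip][1]
    )
    # The thumb extends sideways, so compare x instead of y. Which side
    # counts as "out" depends on handedness and image mirroring; this
    # sketch assumes smaller x means "out" when right_hand is True.
    tip_x, ip_x = landmarks[THUMB_TIP][0], landmarks[THUMB_IP][0]
    thumb_out = tip_x < ip_x if right_hand else tip_x > ip_x
    return count + int(thumb_out)


def key_for_gesture(landmarks, right_hand=True):
    """Return the key name mapped to the current finger count, or None."""
    return KEY_FOR_COUNT.get(count_fingers(landmarks, right_hand))
```

The heuristic only holds for an upright hand facing the camera; a rotated hand would need angle-based checks instead of raw coordinate comparisons.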

Deaf and mute people often have difficulty conveying their thoughts and ideas to others. Sign language is their most expressive mode of communication, but the general public is largely unfamiliar with it, which makes everyday communication hard. Overcoming this barrier requires a system that can correctly translate sign language motions to speech, and speech back to sign language, in real time.

PDF Sign Language Recognition Using Deep Learning Circuit Diagram

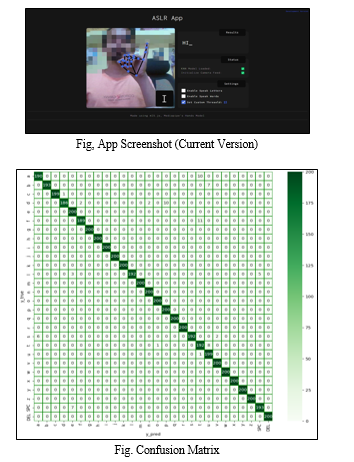

The AI-powered sign language recognition project aims to develop, implement, and evaluate an advanced system designed to accurately interpret and translate sign language gestures into text or speech. This project involves the selection and analysis of a diverse range of sign language gestures, focusing on various contexts and applications.

This project focuses on the detection and interpretation of American Sign Language (ASL) using deep learning and computer vision techniques. The research combines MediaPipe, a framework developed by Google that is used here for hand tracking, with LSTM (Long Short-Term Memory) neural networks to create a system capable of recognizing sign language gestures.
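One way to connect per-frame hand landmarks to a sequence model such as an LSTM is to turn each frame into a fixed-size feature vector and then cut the video into fixed-length windows. The sketch below assumes 21 (x, y, z) landmarks per frame; the function names and the `window` and `step` values are illustrative assumptions, not details from the project.

```python
def frame_features(landmarks):
    """Flatten 21 (x, y, z) landmarks into 63 values relative to the wrist."""
    wx, wy, wz = landmarks[0]  # landmark 0 is the wrist in this layout
    features = []
    for x, y, z in landmarks:
        # Subtracting the wrist makes the features independent of where
        # the hand sits in the frame.
        features.extend((x - wx, y - wy, z - wz))
    return features


def sliding_windows(frames, window=30, step=10):
    """Yield fixed-length sequences of per-frame feature vectors.

    Each yielded item has shape (window, 63), the kind of input an LSTM
    classifier expects. The default sizes are illustrative only.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    feats = [frame_features(f) for f in frames]
    for start in range(0, len(feats) - window + 1, step):
        yield feats[start:start + window]
```

Overlapping windows (a `step` smaller than `window`) give the classifier several chances to see each gesture, at the cost of more inference calls.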

Sign language interpretation using machine learning and artificial ... Circuit Diagram

Sign language recognition (SLR) technology uses AI-driven algorithms to interpret gestures, body movements, and facial expressions into spoken or written language. This process requires complex machine learning models trained on thousands of hours of sign language data. These systems rely heavily on deep learning frameworks to capture subtle nuances, such as finger placement or hand motion speed.

The Sign-Lingual Project is a real-time sign language recognition system that translates hand gestures into text or speech using machine learning and OpenAI technologies. It uses an OpenAI large language model (LLM) for typo correction and contextual understanding, and its description lists OpenAI's Whisper for text-to-speech conversion (Whisper itself is a speech-to-text model).
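Before any typo correction can run, a real-time recognizer has to turn a noisy stream of per-frame predictions into text. A minimal sketch of one common approach (accepting a letter only after it has been stable for several frames) is shown below; the function name and the `min_frames` default are assumptions and do not come from the Sign-Lingual code base.

```python
def letters_from_predictions(predictions, min_frames=5, blank=None):
    """Collapse per-frame letter predictions into a string.

    A letter is emitted once it has been predicted for `min_frames`
    consecutive frames. It is emitted again only after the prediction
    changes, so holding a sign does not repeat the letter.
    """
    text = []
    current, run = blank, 0
    for label in predictions:
        if label == current:
            run += 1
        else:
            current, run = label, 1
        # Emit exactly once, at the moment the run becomes long enough.
        if current != blank and run == min_frames:
            text.append(current)
    return "".join(text)
```

The resulting string may still contain misrecognized letters, which is where a language-model correction step like the one described above would fit.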

Sign language recognition has become increasingly important as a means of improving access and effective communication for people with hearing impairments through Human Computer Interaction (HCI). This paper presents a hybrid approach that unifies deep learning with graph theory and recognizes sign language gestures effectively, building on recent state-of-the-art advances in artificial intelligence.

lingual: Computer vision based sign language to ... Circuit Diagram

The goal of this deep learning project is to create a model for sign language recognition using a convolutional neural network (CNN), built with the Keras package, with OpenCV handling live image capture.

"By improving American Sign Language recognition, this work contributes to creating tools that can enhance communication for the deaf and hard-of-hearing community," says Dr. Stella Batalama, Dean of the FAU College of Engineering and Computer Science. Dr. Batalama emphasizes that this technology could make daily interactions in education, healthcare, and social settings more seamless.
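To make the CNN mentioned above more concrete, the following dependency-free sketch walks through the three operations a typical convolutional block applies to a grayscale image: convolution, ReLU, and max pooling. It is an illustration only; a real project would use Keras layers for this, and the function names here are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of rows) with no padding.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    rows = len(feature_map) // 2 * 2
    cols = len(feature_map[0]) // 2 * 2
    return [
        [
            max(
                feature_map[r][c],
                feature_map[r][c + 1],
                feature_map[r + 1][c],
                feature_map[r + 1][c + 1],
            )
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]
```

A trained network learns the kernel values from labelled images of signs instead of having them written by hand, and stacks several such blocks before a final classification layer.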

![[Updated] AI Sign : Sign Language for PC / Mac / Windows 11,10,8,7 ... Circuit Diagram](https://is4-ssl.mzstatic.com/image/thumb/PurpleSource123/v4/ba/69/a1/ba69a1c4-35f5-c512-b9d3-27fd1b5dcdd0/54c8c7e7-d4e1-44c1-ba63-f208ba304789_1.jpg/392x696bb.jpg)